ReaderMeter: Crowdsourcing research impact

September 22nd, 2010 by dario

Readers of this blog are not new to my ramblings on soft peer review, social metrics and post-publication impact measures:

- can we measure the impact of scientific research based on usage data from collaborative annotation systems, social bookmarking services and social media?

- should we expect major discrepancies between citation-based and readership-based impact measures?

- are online reference management systems a more robust data source for measuring scholarly readership than traditional usage factors (e.g. downloads, clickthrough rates etc.)?

These are some of the questions addressed in my COOP ’08 paper. Jason Priem also discusses the prospects of what he calls “scientometrics 2.0” in a recent First Monday article and it is really exciting to see a growing interest in these ideas from both the scientific and the STM publishing community.

We now need to think of ways of putting these ideas into practice. Science Online London 2010 earlier this month offered a great chance to test a real-world application of these ideas in front of a tech-friendly audience and this post is meant as its official announcement.

ReaderMeter is a proof-of-concept application showcasing the potential of readership data obtained from reference management tools. Following the announcement of the Mendeley API, I decided to see what could be built on top of the data exposed by Mendeley and the first idea was to write a mashup aggregating author-level readership statistics based on the number of bookmarks scored by each of one’s publications. ReaderMeter queries the data provider’s API for articles matching a given author string. It parses the response and generates a report with several metrics that attempt to quantify the relative impact of an author’s scientific production based on its consumption by a population of readers (in this case the 500K-strong Mendeley user base):

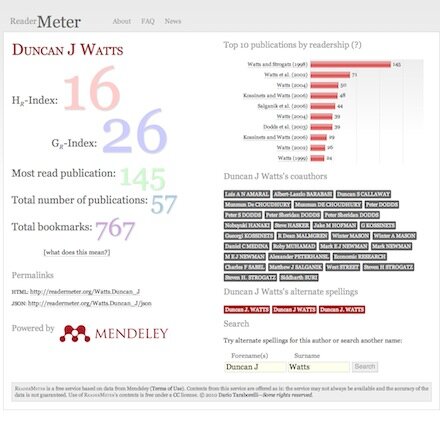

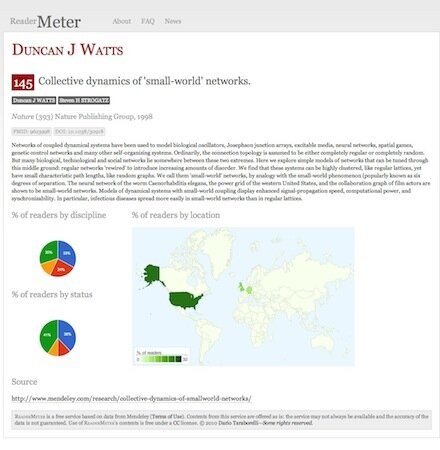

The figure above shows a screenshot of ReaderMeter’s results for social scientist Duncan J Watts, displaying global bookmark statistics, the breakdown of readers by publication as well as two indices (the HR index and the GR index) which I compute from bookmark counts by analogy to the H-index and G-index, two popular citation-based metrics. Clicking on a reference allows you to drill down to display readership statistics for a given publication, including the scientific discipline, academic status and geographic location of readers of an individual document.
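To make the analogy concrete, here is a minimal sketch of how two such indices could be computed from a list of per-publication bookmark counts. It follows the textbook definitions of the H-index and G-index with bookmarks substituted for citations; the function names and the sample counts are invented for illustration, and this is not necessarily the exact code ReaderMeter runs.

```python
def hr_index(bookmark_counts):
    """Largest h such that h publications have at least h bookmarks each."""
    ranked = sorted(bookmark_counts, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def gr_index(bookmark_counts):
    """Largest g such that the top g publications total at least g*g bookmarks."""
    ranked = sorted(bookmark_counts, reverse=True)
    g = 0
    running_total = 0
    for rank, count in enumerate(ranked, start=1):
        running_total += count
        if running_total >= rank * rank:
            g = rank
    return g


# Invented bookmark counts for five publications (not real Mendeley data).
counts = [10, 8, 5, 4, 3]
print(hr_index(counts))  # 4
print(gr_index(counts))  # 5
```

In this variant the GR index can never exceed the number of publications; other formulations of the G-index relax that limit.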

A handy permanent URL is generated to link to ReaderMeter’s author reports (using the scheme: [SURNAME].[FORENAME+INITIALS]), e.g.:
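A rough sketch of that naming scheme, assuming the last token of the author string is the surname and that forename and initials are joined with an underscore (as suggested by the "source" field in the sample JSON output in this post). The helper name `author_slug` is hypothetical.

```python
def author_slug(full_name):
    """Turn 'Forename Initials Surname' into 'Surname.Forename_Initials'."""
    parts = full_name.split()
    if len(parts) < 2:
        raise ValueError("expected at least a forename and a surname")
    surname = parts[-1]                # assumption: surname comes last
    forenames = "_".join(parts[:-1])   # assumption: underscore as separator
    return f"{surname}.{forenames}"


print("http://readermeter.org/" + author_slug("Duncan J Watts"))
# http://readermeter.org/Watts.Duncan_J
```

Multi-word surnames (e.g. "van der Waals") would need extra handling that this sketch does not attempt.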

I also included a JSON interface to render statistics in a machine-readable format, e.g.:

Below is a sample of the JSON output:

    {
      "author": "Duncan J Watts",
      "author_metrics": {
        "hr_index": "15",
        "gr_index": "26",
        "single_most_read": "140",
        "publication_count": "57",
        "bookmark_count": "760",
        "data_source": "mendeley"
      },
      "source": "http://readermeter.org/Watts.Duncan_J",
      "timestamp": "2010-09-02T15:41:08+01:00"
    }
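Note that the numeric fields are serialised as strings, so a client has to convert them before doing any arithmetic. A minimal sketch of consuming this output in Python, using the sample above as an inline string rather than a live request (the variable names are my own):

```python
import json
from datetime import datetime

sample = """
{
  "author": "Duncan J Watts",
  "author_metrics": {
    "hr_index": "15",
    "gr_index": "26",
    "single_most_read": "140",
    "publication_count": "57",
    "bookmark_count": "760",
    "data_source": "mendeley"
  },
  "source": "http://readermeter.org/Watts.Duncan_J",
  "timestamp": "2010-09-02T15:41:08+01:00"
}
"""

report = json.loads(sample)
metrics = report["author_metrics"]

# Keep only the fields that hold digits and convert them to integers.
numeric = {key: int(value) for key, value in metrics.items() if value.isdigit()}

# Average number of bookmarks per publication.
mean_bookmarks = numeric["bookmark_count"] / numeric["publication_count"]

# The timestamp is ISO 8601 with a UTC offset.
generated_at = datetime.fromisoformat(report["timestamp"])

print(numeric["hr_index"], numeric["gr_index"])  # 15 26
print(round(mean_bookmarks, 1))                  # 13.3
print(generated_at.year)                         # 2010
```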

Despite being just a proof of concept (it was hacked in a couple of nights!), ReaderMeter attracted a number of early testers who tried out its first release. Its goal is not to redefine the concept of research impact as we know it, but to complement this notion with usage data from new sources and help identify aspects of impact that may go unnoticed when we only focus on traditional, citation-based metrics. Before a mature version of ReaderMeter is available for public consumption and for integration with other services, though, several issues will need to be addressed.

1. Author name normalisation

The first issue to be tackled is the fact that the same individual author may appear in bibliographic records under a variety of spelling variants. Rod Page was among the first to spot and extensively discuss this issue, which will hopefully be addressed in the next major upgrade (unless a provision to fix this problem is directly offered by Mendeley in a future upgrade of their API).
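One possible first step, sketched here under my own assumptions rather than as the planned fix, is to reduce every author string to a crude matching key: strip diacritics, drop punctuation, collapse whitespace and ignore case. The function name `name_key` is hypothetical.

```python
import unicodedata


def name_key(name):
    """Reduce an author string to a lower-case key without diacritics or punctuation."""
    decomposed = unicodedata.normalize("NFKD", name)
    # Drop combining marks, e.g. the diaeresis that NFKD splits off from "ö".
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    cleaned = stripped.replace(".", " ").replace(",", " ")
    return " ".join(cleaned.lower().split())


variants = ["Duncan J. Watts", "duncan j watts", "Duncan  J Watts"]
print({name_key(v) for v in variants})  # {'duncan j watts'}
print(name_key("Björn Brembs"))         # bjorn brembs
```

This only catches trivial variants. It does not reorder "Surname, Forename" strings, does not match initials against full forenames, and leaves letters such as "ø" or "ß" untouched because they have no Unicode decomposition.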

2. Article deduplication

A similar issue affects individual bibliographic entries, as noted by Egon Willighagen among others. Given that publication metadata in reference management services can be extracted from a variety of sources, the uniqueness of a bibliographic record is far from given. As a matter of fact, several instances of the same publication can show up as distinct items, which produces flawed statistics whenever individual publications and their relative impact need to be considered (as is the case when calculating the H- and G-index). To what extent crowdsourced bibliographic databases (such as those of Mendeley, CiteULike, Zotero, Connotea, and similar distributed reference management tools) can tackle the problem of article duplication as effectively as manually curated bibliographic databases is an interesting issue that sparked a heated debate (see this post by Duncan Hull and the ensuing discussion).
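As an illustration of the problem, a naive deduplication pass could merge records whose titles coincide after normalisation and add up their reader counts before any index is computed. This is a sketch under stated assumptions: the titles and counts are invented, matching on titles alone is my simplification, and summing counts presumes the duplicate records were bookmarked by different readers.

```python
import re
from collections import defaultdict


def title_key(title):
    """Lower-case a title and replace every run of non-alphanumerics with a space."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_duplicates(records):
    """Sum reader counts of (title, readers) records sharing a normalised title."""
    merged = defaultdict(int)
    for title, readers in records:
        merged[title_key(title)] += readers
    return dict(merged)


# Invented records: the first two are the same paper entered twice.
records = [
    ("An Example Paper on Small-World Networks", 12),
    ("An example paper on small world networks.", 5),
    ("A Different Paper", 7),
]
print(merge_duplicates(records))
# {'an example paper on small world networks': 17, 'a different paper': 7}
```

The ASCII-only pattern discards accented letters, so titles in other scripts would need a Unicode-aware key; a shared identifier such as a DOI, where available, would be a safer match than the title.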

3. Author disambiguation

A far more challenging problem consists in disambiguating real homonyms. At the moment, ReaderMeter is unable to tell the difference between two authors with an identical name. Considering that surnames like Wang appear to be shared by about 100M people on the planet, disambiguating authors with a common surname is not something that can be easily sorted out by a consumer service such as ReaderMeter. Global initiatives with broad institutional support such as the ORCID project are trying to fix this problem for good by introducing a unique author identifier system, but precisely because of their scale and ambitious goal they are unlikely to provide a viable solution in the short run.

4. Reader segmentation and selection biases

You may wonder: how genuine is data extracted from Mendeley as an indicator of an author’s actual readership? Calculating author impact metrics based on the user population of a specific service will by definition always produce skewed results, due to different adoption rates by different scientific communities or demographic segments (e.g. by academic status, language, gender) within the same community. And how about readers who just don’t use any reference management tools? Björn Brembs posted some thoughtful considerations on why any such attempt at measuring impact based on the specific user population of a given platform/service is doomed to fail. His proposed solution, however (a universal outlet where all scientific content consumption should happen), sounds not only like an unlikely scenario but also, in many ways, an undesirable one. Diversity is one of the key features of the open source ecosystem, for one, and as long as interoperability is achieved (witness the example of the OAI protocol and its multiple software implementations), there is certainly no need for a single service to monopolise the research community’s attention for projects such as ReaderMeter to be realistically implemented.

The next step on ReaderMeter’s roadmap will be to integrate data from a variety of content providers (such as CiteULike or Bibsonomy) that provide free access to article readership information: although not the ultimate solution to the enormous problem of user segmentation, data integration from multiple sources should hopefully help reduce biases introduced by the population of a specific service.
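In its simplest form, such an integration could add up per-article reader counts across providers, keyed by a shared article identifier. The sketch below assumes each provider's data has already been fetched and reduced to an identifier-to-count mapping; the identifiers and numbers are invented, and no real provider API is called.

```python
from collections import Counter


def combine_sources(per_source):
    """Add up reader counts per article identifier across all data sources."""
    totals = Counter()
    for counts in per_source.values():
        totals.update(counts)  # Counter.update adds counts instead of replacing them
    return dict(totals)


# Invented counts keyed by placeholder identifiers.
per_source = {
    "mendeley": {"doi:10.0000/example.1": 40, "doi:10.0000/example.2": 3},
    "citeulike": {"doi:10.0000/example.1": 12},
    "bibsonomy": {"doi:10.0000/example.2": 5, "doi:10.0000/example.3": 1},
}
print(combine_sources(per_source))
# {'doi:10.0000/example.1': 52, 'doi:10.0000/example.2': 8, 'doi:10.0000/example.3': 1}
```

A plain sum counts a reader twice if they use two services, and it does nothing to correct for the different sizes of each user base, so this is a starting point rather than a debiasing method.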

What’s next

I will be working in the coming days on an upgrade to address some of the most urgent issues. In the meantime, feel free to test ReaderMeter, send me your feedback, follow the latest news on the project or just help spread the word!

September 22nd, 2010 at 11:04 pm

Nice post, Dario; you do a good job of articulating the main problems facing ReaderMeter and similar efforts.

September 23rd, 2010 at 2:59 pm

Great stuff!

I might not be the most important example for ReaderMeter, but I found a glitch in your author name normalization (which I expected, by the way).

My name is David Haberthür (note the “ü”), and if you’re searching for me via http://readermeter.org/Haberthür.David, nothing is found. But if you’re searching for me via http://readermeter.org/Haberthur.David, two publications of mine are found…

September 24th, 2010 at 2:53 am

You wrote:

His proposed solution, however – a universal outlet where all scientific content consumption should happen–sounds not only like an unlikely scenario, but also in many ways an undesirable one. Diversity is one of the key features of the open source ecosystem, for one, and as long as interoperability is achieved (witness the example of the OAI protocol and its multiple software implementation), there is certainly no need for a single service to monopolise the research community’s attention for projects such as ReaderMeter to be realistically implemented.

Obviously, what I suggested need not be a monopoly. A standard to which all parties agree is sufficient: a standard for author and paper disambiguation, a standard for citation types, bookmarked references, etc. Basically, a set of federated standards which makes it possible to get all relevant information from all the scientific literature via a standardized protocol. The opposite of the current balkanization, obviously. I thought I spelled this out clearly in my post, but realize that ‘place’ could be taken literally, despite my repeated reference to standards. Personally, as a user, I don’t care if ‘place’ is physical or simply the means by which I access the information I need. It is ludicrous to install numerous pieces of software and search in 4-5 different sites just to do something that would be 1-3 lines of code away if the appropriate standards were in place.

The current information infrastructure is organized according to 400-year-old standards, which are not even double-digit light years close to the ballpark in which they need to be for scientists to be able to handle the information they need to cover.

That’s the point I was trying to make: we need the equivalent of http, smtp, ftp, etc. and then I don’t care which browser, e-mail client or ftp-client you use.

September 24th, 2010 at 5:00 pm

nice article and nice website. do you know http://article-level-metrics.plos.org/ ? it’s a similar effort though not based on Mendeley.

the main challenge i see in “soft peer review” in the long run is cheating and spamming. if metrics from social bookmarks, downloads etc. will be used to calculate researcher impact, it will be very tempting for researchers to cheat the systems. maybe a nice read in this regard: http://sciplore.org/blog/2010/06/12/new-paper-on-the-robustness-of-google-scholar-against-spam/

September 27th, 2010 at 6:30 pm

Yes, I am familiar with the article-level metrics programme at PLoS. I agree on the risks of gaming, and thanks for the link to the HT10 poster. It looks like the preprint does not present any actual results other than “yes Scholar can be spammed”. This doesn’t strike me as surprising and is consistent with the general perception of Scholar’s citation counts as being totally flawed. Funny how the take-home message of this work is that *citation* metrics can also fall prey to some very trivial form of spam.

October 3rd, 2010 at 1:08 pm

[...] a blog post last week, Dario Taraborelli officially announced ReaderMeter. ReaderMeter takes the usage data from [...]

October 11th, 2010 at 5:54 pm

Interesting concept. Surgeons, for example, are not happy with the citation index, and think they are at a disadvantage compared to other medical fields. A very interesting option would be to combine it with a “case meter”, e.g. anonymized medical (imaging) data of the cited publication, within an open data concept.

October 13th, 2010 at 1:57 pm

It’s really a wonderful tool for people in this field, and I hope it can bring about a great change. The citation index has some issues, but somehow we can ignore that. Overall a very nice and innovative idea.
