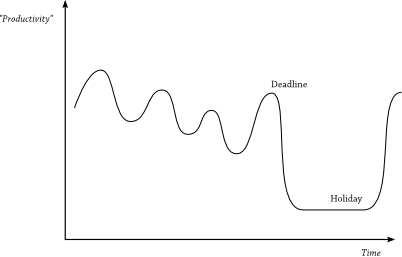

Testing the general model of productivity

August 5th, 2009 by james

In a previous episode, I suggested that productivity is really just an efficiency measure. Since the working currency for academics is arguably prestige, productive researchers are those who can acquire the most prestige for the least effort. This can be formally written as:

![]()

where each task $t$ is assigned a prestige benefit ($p_t$ prestige per activity × $n_t$ activities) and an effort cost ($a_t$ attention units per hour × $h_t$ hours).
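To make the definitions concrete, here is a minimal sketch of how the calculation might look in code. It assumes one plausible reading of the model, namely total prestige benefit divided by total effort cost across all tasks; the aggregation, the `Task` class, the `productivity` function, and the example numbers are all my own illustrative assumptions rather than anything taken from the data.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """One task t, using the symbols from the definitions above."""
    name: str
    prestige_per_activity: float  # p_t
    activities: int               # n_t
    attention_per_hour: float     # a_t
    hours: float                  # h_t


def productivity(tasks):
    """Total prestige benefit divided by total effort cost.

    Assumption: benefits and costs are summed over tasks before
    taking the ratio. A per-task ratio would be another reading.
    """
    benefit = sum(t.prestige_per_activity * t.activities for t in tasks)
    effort = sum(t.attention_per_hour * t.hours for t in tasks)
    if effort == 0:
        raise ValueError("total effort is zero, productivity is undefined")
    return benefit / effort


# Hypothetical tasks with made-up values, purely for illustration.
tasks = [
    Task("write paper", prestige_per_activity=10.0, activities=1,
         attention_per_hour=0.8, hours=40.0),
    Task("answer email", prestige_per_activity=0.1, activities=50,
         attention_per_hour=0.2, hours=10.0),
]

print(f"productivity = {productivity(tasks):.3f}")
```

Keeping benefit and effort as separate sums makes it easy to see which tasks dominate each side of the ratio before collapsing everything into a single number.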

The comments on the original post showed a lot of enthusiasm for implementing and testing the theory, so I’ve spent the past month gathering data and preparing for a bit of an empirical assessment. The results are a work in progress, but I hope to keep the conversation going and get your feedback. Here, then, is a step-by-step guide to how I’ve analysed my productivity over the last month using the general model.